Add your predictions. I will not trade heavily (I may make small predictions with 1 mana for myself) because my subjective judgement might come into play.

Each option resolves YES if it is more or less correct (judged by the spirit of the prediction), 50% if it is technically true but not in spirit, and NO if it is not true.

All predictions will be evaluated upon a release, if there ever is one.

Do not submit spam or irrelevant options; those will be N/A'd.

Inspired by this tweet (by @Mira)

If no GPT-5 is released this decade, the market will be N/A'd. You can trade on whether that will happen here:

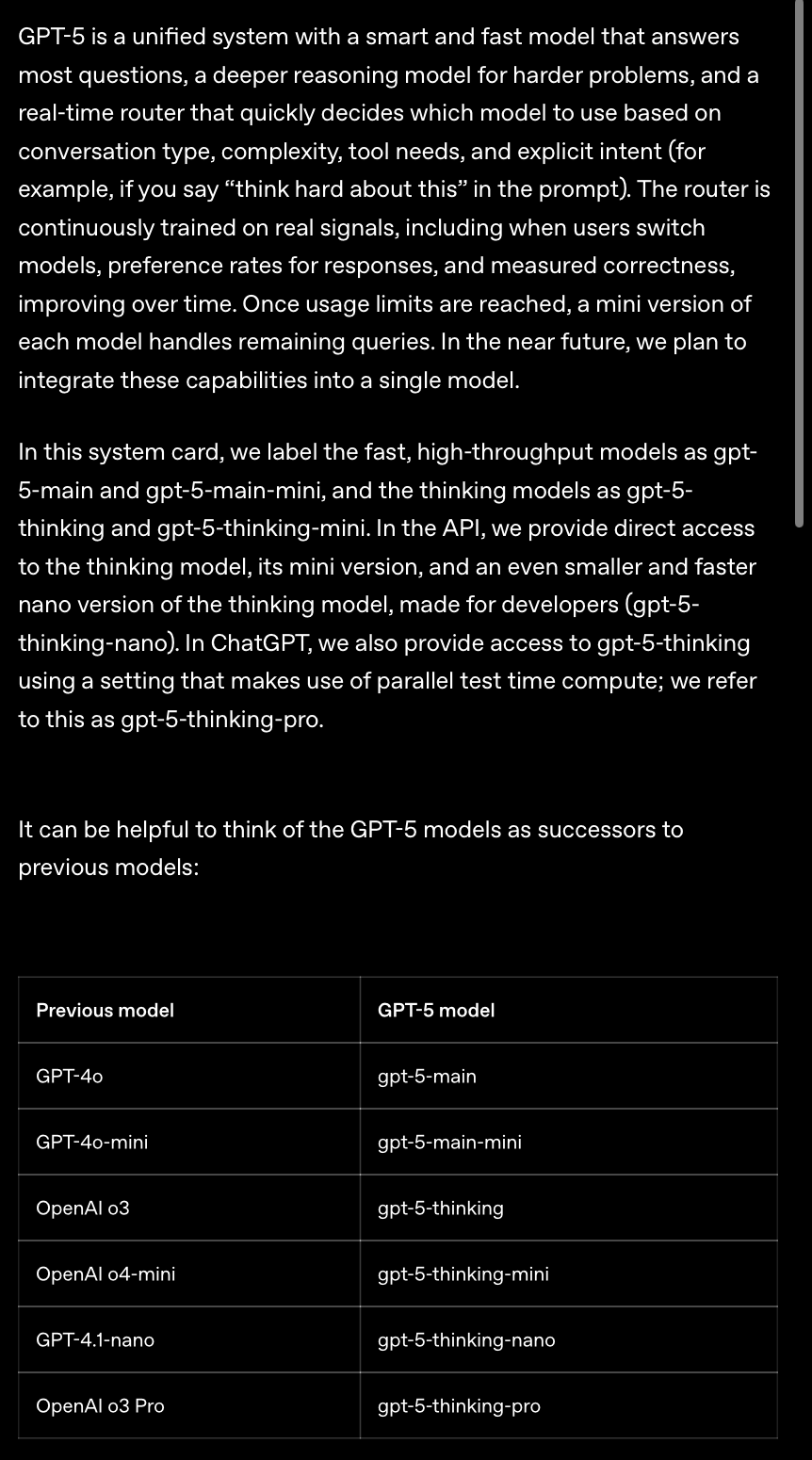

@ChinmayTheMathGuy meaning 1 unified model that routes between submodels

Both Mark Chen (interview with Matthew Berman) and Sam Altman (interview with Cleo Abram) mentioned this. It's also in their model card.

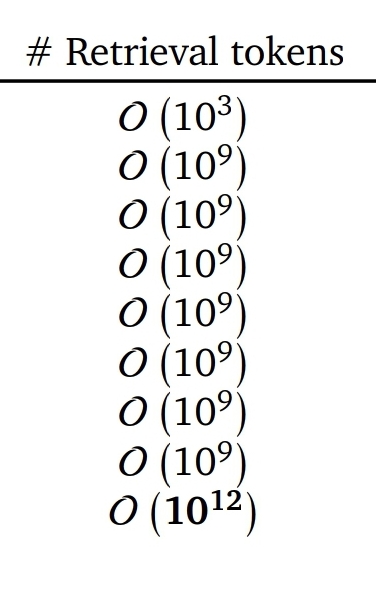

WTF, why do Google scientists not understand how big O notation works? I've seen people misuse big O notation like this in everyday use, but in a paper this is too much.

To clarify, this needs to be something significantly different. Something like FlashAttention, which is just a faster implementation of attention in transformers, won't count. The new architecture may still contain transformers, but it needs to be a bigger change than an improvement to a single aspect of the architecture or a better implementation.