Prediction #5 from:

My Takeaways From AI 2027 - by Scott Alexander

Must be a state of the art model, used by millions of people, not just a research project.

Update 2025-07-26 (PST) (AI summary of creator comment): The creator has stated they will likely defer to Scott Alexander's interpretation if he writes about this topic in the future.

Otherwise, the guiding principle is that the market will resolve to YES if humans are unable to follow the thinking process the AI is using. The creator stated this would likely include cases where:

- The AI's thinking is a mixture of modalities (e.g., image, video, and text).
- The AI's chain-of-thought starts as intelligible human language but becomes unintelligible after further training.
- The AI's internal representations were never intelligible to humans to begin with.

Suppose we train a model jointly on images, video, and text, and it thinks in a mixture of images, video, and text. How does this resolve?

Or is the intent here to resolve positively in cases where the CoT is normally very hard for humans to understand, but where it "started" as intelligible before RL?

Or rather, to resolve positively only in cases where there was no CoT to begin with, i.e., where it was some vector-based representation from the start?

Or... to resolve positively in both cases?

@1a3orn I'll probably defer to Scott Alexander's interpretation if he writes about it.

But otherwise, the principle is that humans should be able to follow the thinking process that an AI is using. So I would likely resolve YES in all of those cases.

@TimothyJohnson5c16

> But otherwise, the principle is that humans should be able to follow the thinking process that an AI is using.

Fair enough. Seems like a pretty fuzzy boundary, though. For example, if we switched to diffusion models, imo, you might be able to follow the thought of a diffusion LLM less well than that of an autoregressive model, but still much better than I can follow your thought, since you have no CoT visible to me at all.

@1a3orn Yeah, I agree it's pretty fuzzy. I'm open to suggestions if you think of a better way to draw the boundary, but it's hard to predict what AI developments might happen in the next five years.

@eapache The idea has been explored, but that's not what I had in mind as a "major AI model".

I think I'll change the title to "state-of-the-art" to make it a little clearer. I'm thinking of something that's used by millions of people, not just a research project.

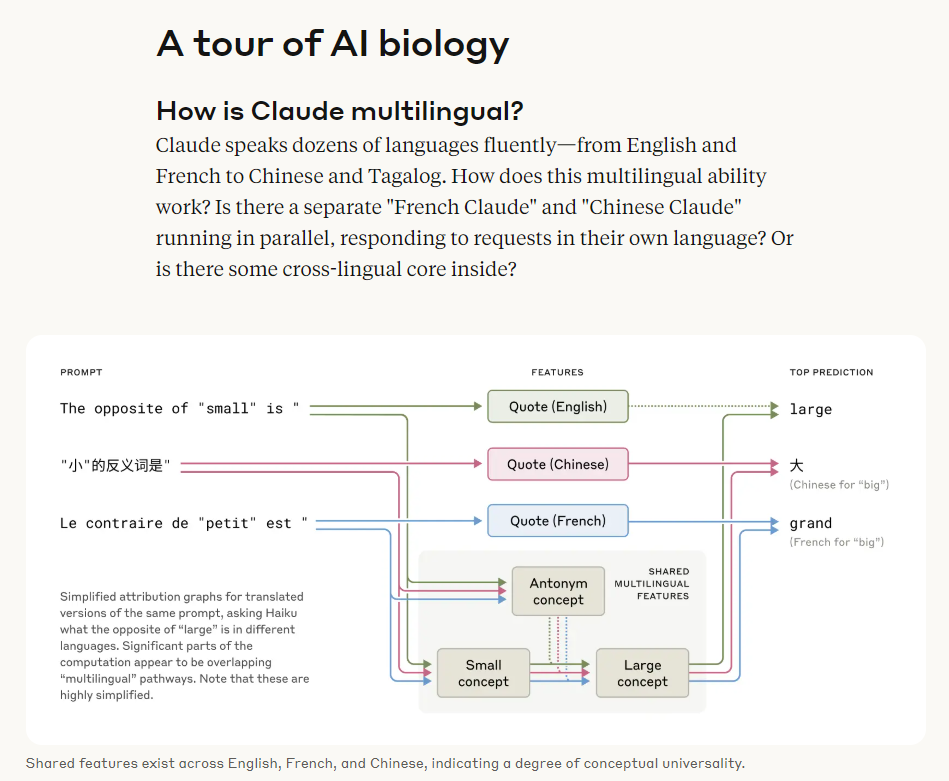

I'm confused by this. Doesn't the presence of similar features, regardless of the "language" the model is using, essentially imply that (1) if we have good interpretability, neuralese won't be hard to decipher, and (2) models won't need to be specifically trained for it, or it won't confer much benefit?

@TimothyJohnson5c16 The development or prevention of neuralese is a pivotal act determining whether alignment succeeds in the story. The story implies there's a lot of pressure pushing for this to happen.